AWS EC2

How to deploy an AWS EC2 cluster with Spark and Cassandra

Context:

This tutorial presents a step-by-step guide to configuring an AWS EC2 cluster with Apache Spark and Apache Cassandra.

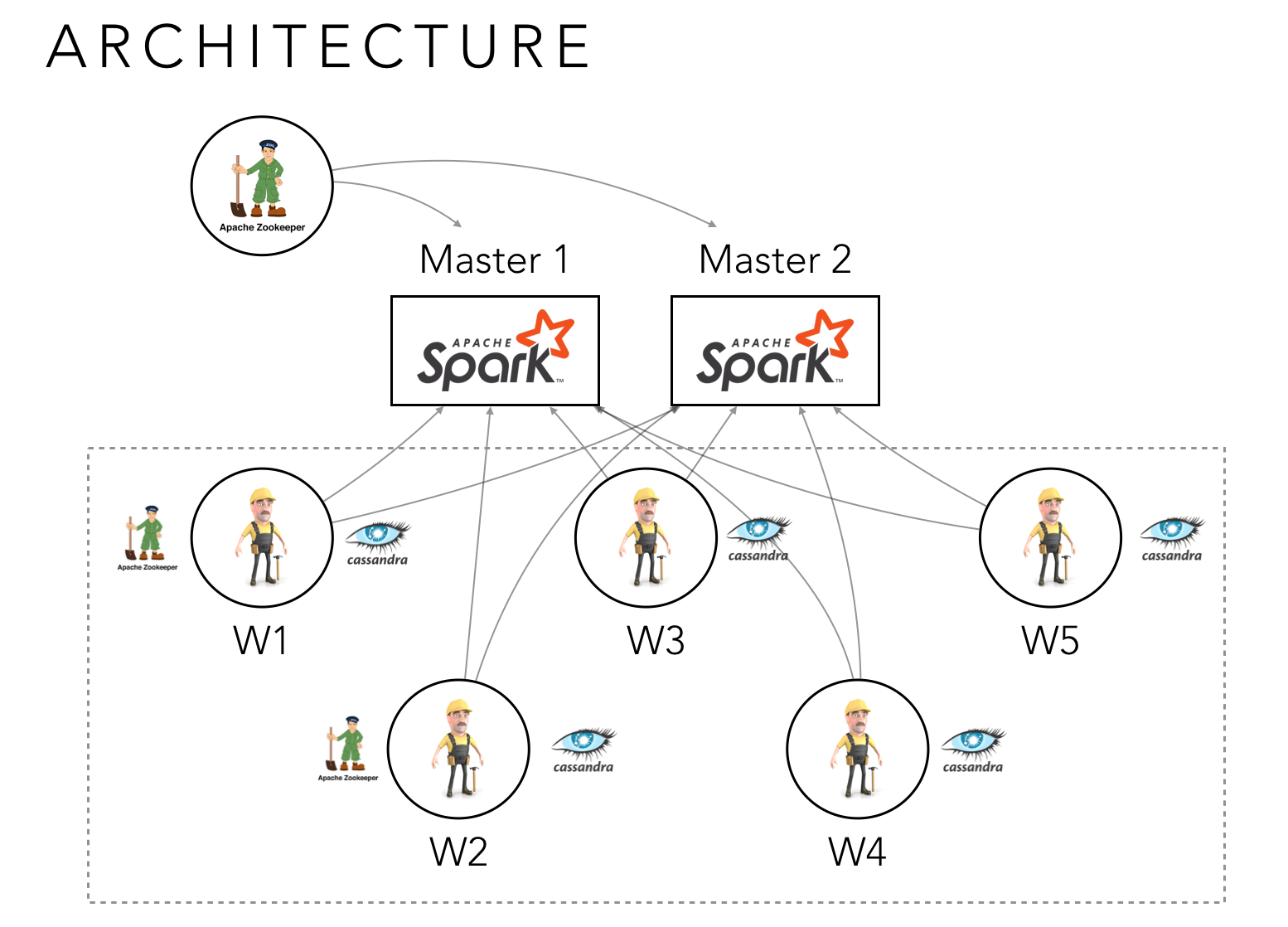

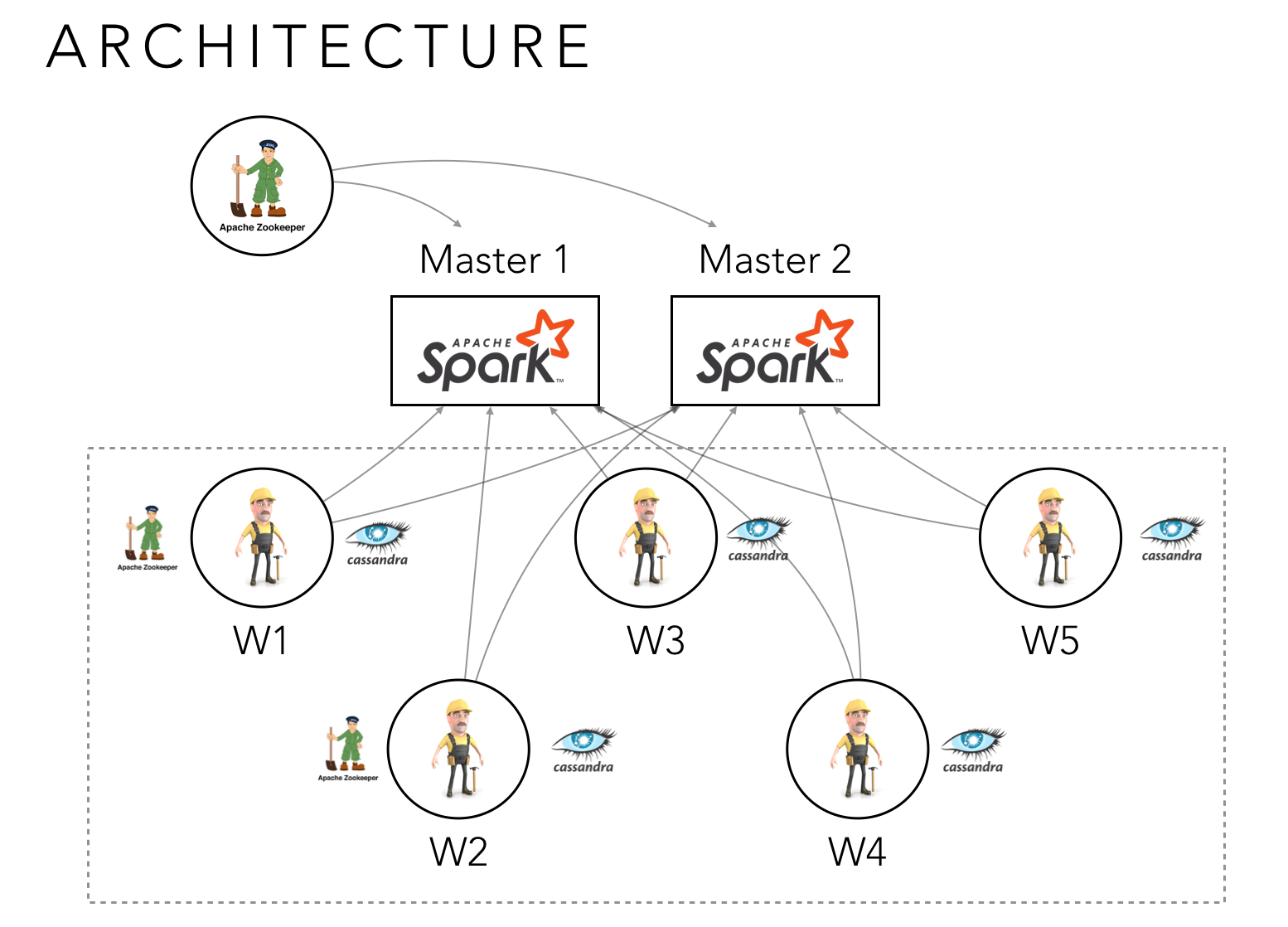

Architecture we will create:

First of all, I would like to present the architecture I want to deploy.

This architecture uses 8 AWS EC2 instances:

- 2 Master nodes with Apache Spark 2.3.2

- 5 Slave nodes with Apache Spark 2.3.2 and Apache Cassandra 3.11.2; ZooKeeper is also installed on 2 of these nodes.

- The last one is a node dedicated to the resilience of the Masters. ZooKeeper is installed on it.

(Slaves are also called Workers.)
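
To make the layout concrete, the node list above can be written down as plain data and tallied. This is only an illustrative sketch: the host names (`master-1`, `worker-1`, `zk-1`, and so on) and the service labels are hypothetical placeholders, and which two Workers carry ZooKeeper is an arbitrary choice made for the example.

```python
from collections import Counter

# Hypothetical host names and service labels; only the node counts and the
# software installed per role come from the architecture described above.
CLUSTER = {
    "master-1": {"spark-master"},
    "master-2": {"spark-master"},
    "worker-1": {"spark-worker", "cassandra", "zookeeper"},
    "worker-2": {"spark-worker", "cassandra", "zookeeper"},
    "worker-3": {"spark-worker", "cassandra"},
    "worker-4": {"spark-worker", "cassandra"},
    "worker-5": {"spark-worker", "cassandra"},
    "zk-1": {"zookeeper"},
}


def service_counts(cluster):
    """Count how many nodes run each service."""
    return Counter(service for services in cluster.values() for service in services)


def nodes_running(cluster, service):
    """Return the sorted names of the nodes that run the given service."""
    return sorted(name for name, services in cluster.items() if service in services)


if __name__ == "__main__":
    print(len(CLUSTER), "instances")
    for service, count in sorted(service_counts(CLUSTER).items()):
        print(f"{service}: {count}")
    print("ZooKeeper nodes:", nodes_running(CLUSTER, "zookeeper"))
```

Keeping the topology in one place like this makes it easy to check that the counts still add up when nodes are added or removed.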

In terms of resilience:

- The resilience of the Slaves is handled automatically by the Spark Master.

- The resilience of the Masters is handled by ZooKeeper.

- ZooKeeper itself is resilient thanks to the quorum of three nodes that run it.
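
The reason three ZooKeeper nodes are enough follows from majority arithmetic: an ensemble stays available as long as a strict majority of its members are up. Here is a minimal sketch of that calculation. The function names are made up for illustration and are not part of any ZooKeeper API.

```python
def majority(ensemble_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    if ensemble_size < 1:
        raise ValueError("ensemble_size must be at least 1")
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many nodes can fail while a majority is still alive."""
    return ensemble_size - majority(ensemble_size)


if __name__ == "__main__":
    # Print size, required majority, and tolerated failures for a few sizes.
    for size in (1, 2, 3, 5):
        print(size, majority(size), tolerated_failures(size))
```

With the three-node ensemble described here, one ZooKeeper node can be lost. A two-node ensemble would tolerate none, which is why an odd number of nodes is the usual choice.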

If you want to build this architecture, I invite you to follow the 4 tutorials below, in order.

- The first one explains how to create instances on AWS EC2.

- The second deals with installing and configuring Apache Cassandra.

- The third explains how to install Apache ZooKeeper, and why.

- The last one shows how to install and configure Apache Spark.

Of course, you can increase the number of Master nodes and Slave nodes.

In this case, you just need to adapt your configuration files.
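
As an illustration of what adapting the configuration can involve, the sketch below derives the host-dependent strings from lists of nodes, so that adding a Master or a ZooKeeper node only means editing a list. It assumes Spark's standalone mode with ZooKeeper recovery (the `spark.deploy.recoveryMode` and `spark.deploy.zookeeper.url` properties) and the default ports 7077 and 2181. The host names are hypothetical, and the exact settings should be checked against the documentation for your Spark version.

```python
def zookeeper_connect_string(hosts, port=2181):
    """Join ZooKeeper hosts into a 'host:port,host:port' connect string."""
    return ",".join(f"{host}:{port}" for host in hosts)


def spark_master_url(masters, port=7077):
    """Build a standalone master URL that lists every Master, for failover."""
    return "spark://" + ",".join(f"{host}:{port}" for host in masters)


def spark_daemon_java_opts(zk_hosts):
    """Build recovery options of the kind exported as SPARK_DAEMON_JAVA_OPTS."""
    return (
        "-Dspark.deploy.recoveryMode=ZOOKEEPER "
        f"-Dspark.deploy.zookeeper.url={zookeeper_connect_string(zk_hosts)}"
    )


if __name__ == "__main__":
    # Hypothetical host names, used only for the example.
    masters = ["master-1", "master-2"]
    zk_hosts = ["worker-1", "worker-2", "zk-1"]
    print(spark_master_url(masters))
    print(spark_daemon_java_opts(zk_hosts))
```

Adding a third Master would then only require appending its host name to `masters` and regenerating the strings.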

Amazon Elastic Compute Cloud (Amazon EC2) is a web service that provides secure, resizable compute capacity in the cloud. It is designed to make web-scale cloud computing easier for developers. This tutorial will help you get started with AWS EC2.

The Apache Cassandra database is the right choice when you need scalability and high availability without compromising performance. Linear scalability and proven fault-tolerance on commodity hardware or cloud infrastructure make it the perfect platform for mission-critical data. We’ll see how to configure Cassandra on an AWS EC2 cluster and create a resilient architecture that is big-data ready.

ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. All of these kinds of services are used in some form or another by distributed applications. ZooKeeper is useful if you want to make your architecture more robust, for example by guarding against the consequences of a Master failure.

This tutorial will help you install Apache Spark on your AWS EC2 cluster.

I’ll show you how to set up a standard configuration that allows your elected Master to distribute its jobs across the Worker nodes.